Logistics

- Lecture: Tu/Th 2:00 - 3:20 PM

- Discussion: Th 3:30 - 4:20 PM

- Location: Olmsted 1431

- Instructor: Zhe Fei (Olmsted 1344), zhef@ucr.edu

- Office Hours: Th 11:00 - 2:00 PM, or by appointment.

Description

4 Units, Lecture, 3 hours; discussion, 1 hour. Prerequisite(s): STAT 201A and STAT 206 or equivalents; or consent of instructor. Computational algorithms for research in statistics. Topics include numerical linear algebra, numerical optimization, Monte Carlo methods, and bootstrap and resampling methods.

Textbook

Kenneth Lange, Numerical Analysis for Statisticians, 2nd Edition, Springer (2010), available online, UCR library link

James Gentle, Computational Statistics, Springer (2009), available online, UCR library link

Discussion

Coding and implementation;

Mini project and presentation.

Assignments

Expected 4 homework assignments (40%).

You are welcome, and encouraged, to work with each other on the problems, but you must turn in your own work. If you copy someone else's work, both parties will receive a 0 for the homework grade and will be reported to the Student Conduct & Academic Integrity Programs (SCAIP).

Submission instructions: You will turn in your homework on elearn.ucr.edu, including both your source code and output files.

Discussion project

A mini project on topics covered in class, involving coding, implementation, and a presentation.

Final project

A real-data application of topics covered in class, written up as a draft paper with detailed code, implementation, and results.

Attendance

Attendance at both lectures and discussions is mandatory. If you are unable to attend a lecture or lab due to medical or technical reasons, please communicate proactively with the instructor and TA and notify us of your circumstances at the earliest opportunity.

Grading

Homework, 40%

Discussion project and presentation, 20%

Attendance, 20%

Final project, 20%

Grades may be curved at the end. Cumulative numerical averages of 90-100 are guaranteed at least an A-, 80-89 at least a B-, and 70-79 at least a C-. However, the exact ranges for letter grades will be determined after the final project.

Work Load and Teamwork

You are expected to put in about 3 - 4 hours of work outside of class for each hour of lecture. Some of you will do well with less time, and some might need more. You are encouraged to study with your classmates. But remember that anything that is not explicitly a team assignment must be your own work.

Policies

You are responsible for checking announcements and accessing course materials on Canvas.

Late work policy for homework and lab reports:

- next day: lose 50% of total possible points

- later than next day: lose all points

There will be no make-ups for homework, labs, quizzes, or exams. If the midterm exam must be missed, absence must be officially excused in advance, in which case the missing exam score will be imputed using the final exam score. This policy only applies to the midterm. All other missed assessments will receive a grade of 0. The final exam must be taken at the stated time. You must take the final exam to pass this course.

Please be considerate of your classmates by arriving on time. If you arrive after at least one student has finished the exam and left the room, you will NOT be allowed to sit for the exam, and will receive a “0”. Turn off cell phones before entering the exam room. If your cell phone rings during the exam, you will lose points on the exam.

Use of disallowed materials (textbook, class notes, web references, any form of communication with classmates or other persons, etc.) during exams will not be tolerated. This will result in a 0 on the exam for all students involved, possible failure of the course, and will be reported to the Student Conduct & Academic Integrity Programs (SCAIP). If you have any questions about whether something is or is not allowed, ask me beforehand.

Tentative Topics

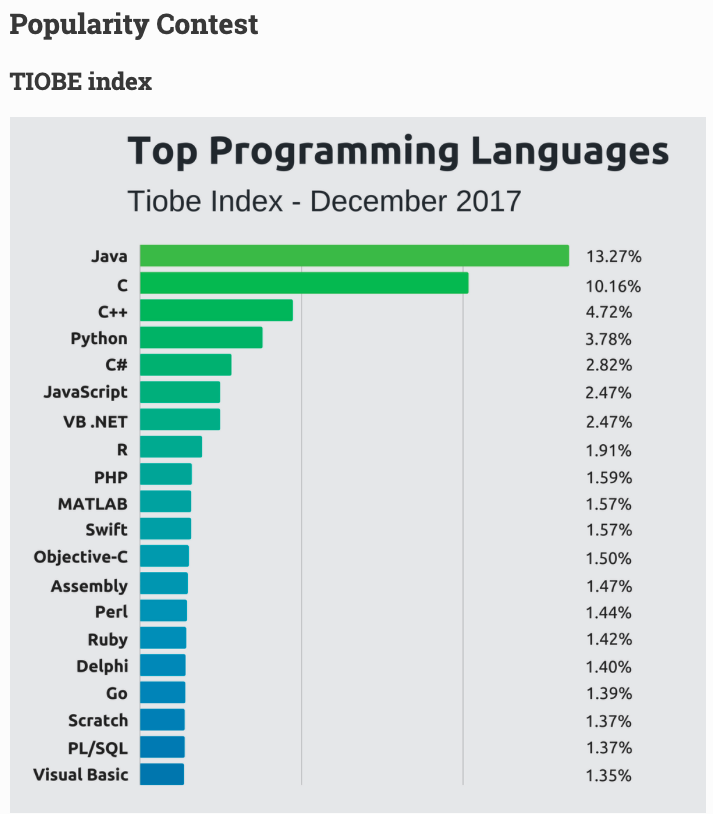

Numerical linear algebra: vectors and matrices; eigenvalues; SVD;

Numerical optimization: MM; EM; Newton's method;

Linear Programming;

Resampling methods: Monte Carlo; bootstrap; cross validation;

Advanced optimization topics

Quotes

"All models are wrong, but some are useful." - George Box

"Statistics is partly empirical and partly mathematical. It is now almost entirely computational." - Kenneth Lange

"A data scientist is someone who is better at statistics than any software engineer and better at software engineering than any statistician." - Josh Wills of Cloudera

Large Language Models

A groundbreaking evolution in artificial intelligence.

These models are deep neural networks—often with billions of parameters—that have been pre-trained on vast datasets, enabling them to understand and generate human-like text.

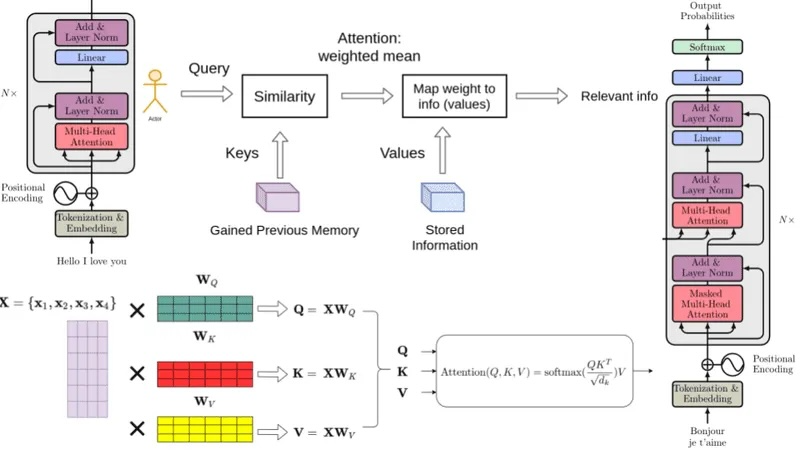

Built on the transformer architecture, LLMs excel at a wide range of language tasks, from translation and summarization to creative writing and dialogue generation.

Reasoning

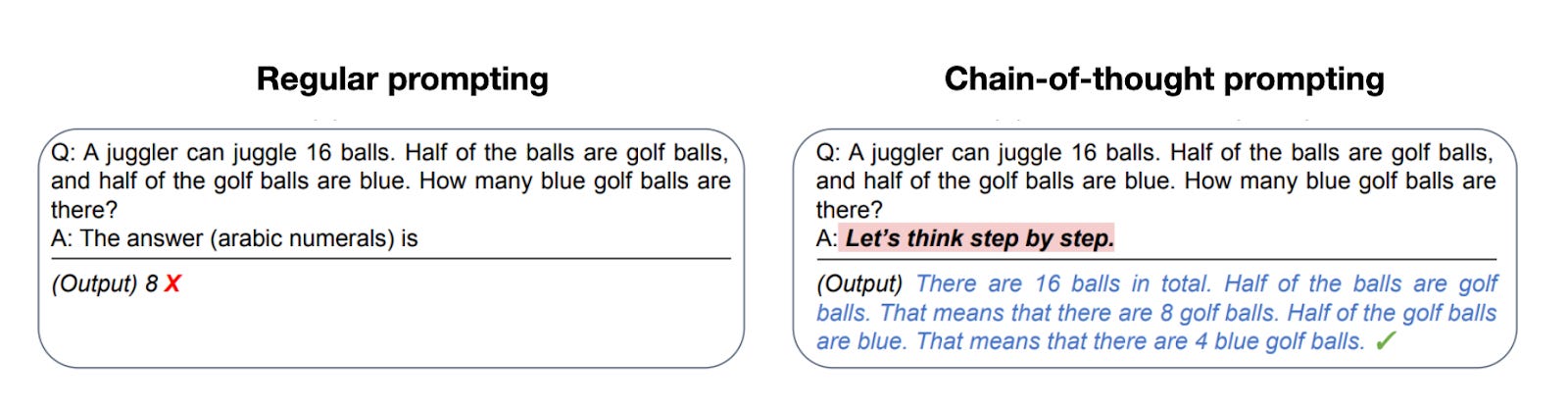

An LLM-based reasoning model is an LLM designed to solve multi-step problems by generating intermediate steps or structured "thought" processes.

Unlike simple question-answering LLMs that only return the final answer, reasoning models either explicitly display their thought process or handle it internally, which helps them perform better at complex tasks such as puzzles, coding challenges, and mathematical problems.

Inference-Time Scaling: spending more computation at inference time to improve answers, by analogy with humans, who give better responses when given more time to think.

- Chain-of-Thought

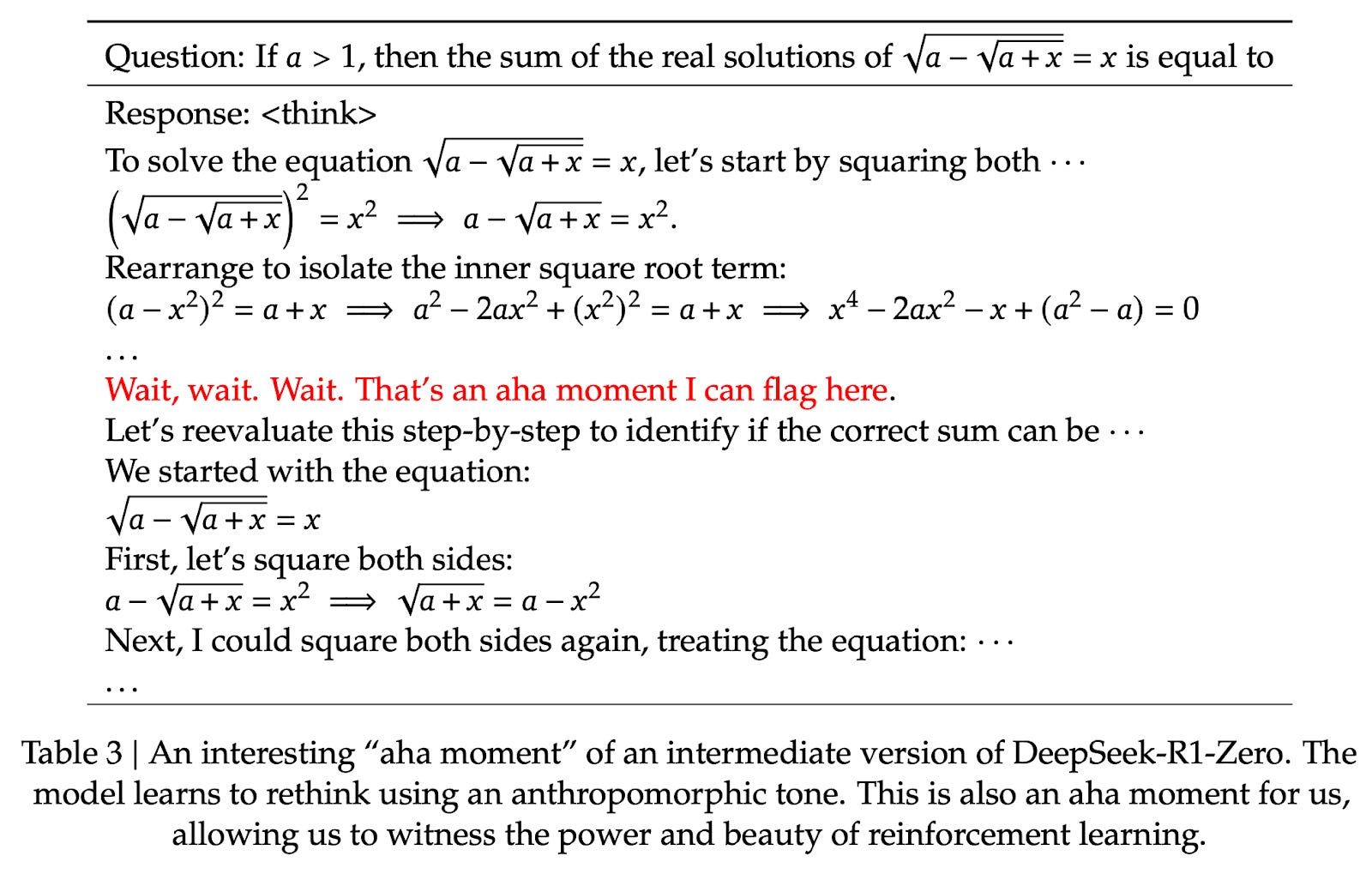

Pure Reinforcement Learning (RL):

- A mini-R1 example, https://www.philschmid.de/mini-deepseek-r1

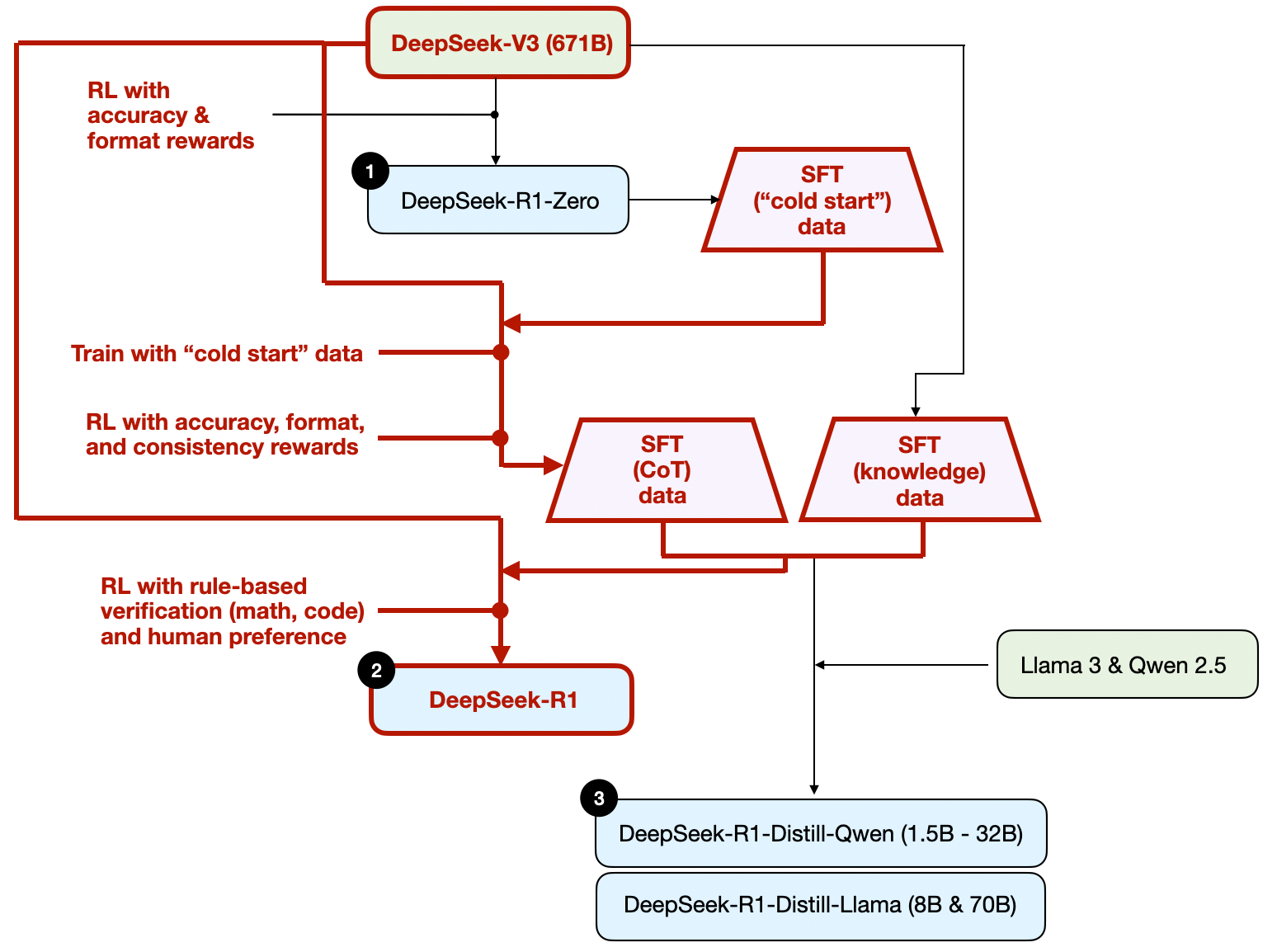

Supervised fine-tuning and reinforcement learning (SFT + RL)

- Typically, a model is first trained with SFT on high-quality instruction data and then further refined using RL to optimize specific behaviors.

Supervised fine-tuning and model distillation

- Fine-tuning smaller LLMs, such as Llama 8B and 70B and Qwen 2.5 models (0.5B to 32B), on a high-quality SFT dataset generated by larger LLMs.

Use of AI

AI tools can greatly improve our learning and our work efficiency.

Tell AI what to do, ask the right questions;

AI as helpers;

Never let AI do it for you;

Always verify and double check AI answers.

How is it related to our class?

Transformers
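
The central operation of the transformer architecture is scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, which is essentially matrix multiplication followed by a row-wise softmax. The NumPy sketch below is a minimal single-head version for illustration only; the random token embeddings X and the projection matrices W_q, W_k, W_v are hypothetical stand-ins for learned weights, and masking, multiple heads, and batching are omitted.

```python
import numpy as np

def softmax(x, axis=-1):
    # subtract the row max before exponentiating for numerical stability
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V):
    """Q: (n, d_k), K: (m, d_k), V: (m, d_v) -> output (n, d_v), weights (n, m)."""
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)     # similarity of each query to each key
    weights = softmax(scores, axis=-1)  # each row is a probability distribution
    return weights @ V, weights         # weighted average of the value vectors

rng = np.random.default_rng(0)
n_tokens, d = 4, 8
X = rng.standard_normal((n_tokens, d))  # hypothetical token embeddings
W_q, W_k, W_v = (rng.standard_normal((d, d)) for _ in range(3))  # hypothetical weights

out, attn = scaled_dot_product_attention(X @ W_q, X @ W_k, X @ W_v)
print(out.shape, attn.shape)  # (4, 8) (4, 4)
print(attn.sum(axis=1))       # every row sums to 1 (up to rounding)
```

The division by sqrt(d_k) keeps the scores from growing with the embedding dimension, which would otherwise push the softmax toward near one-hot weights.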

Foundations in Statistical Computing

Large Language Models (LLMs) are built upon a wealth of statistical and computational techniques that are fundamental to advanced statistical computing.

Loss Functions: Mean Squared Error (MSE), Cross-Entropy Loss, and Kullback-Leibler Divergence (KL Divergence).
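
To make these three losses concrete, here is a minimal NumPy sketch on toy numbers chosen for illustration. It also shows the identity H(p, q) = H(p) + KL(p || q): for a one-hot target p the entropy H(p) is 0, so cross-entropy and KL divergence coincide. The small eps clip is an assumed safeguard against log(0), not part of the definitions.

```python
import numpy as np

def mse(y, y_hat):
    # mean of the squared residuals
    return np.mean((y - y_hat) ** 2)

def cross_entropy(p, q, eps=1e-12):
    # H(p, q) = -sum_k p_k log q_k; p is the target, q the predicted distribution
    q = np.clip(q, eps, 1.0)
    return -np.sum(p * np.log(q))

def kl_divergence(p, q, eps=1e-12):
    # KL(p || q) = sum_k p_k log(p_k / q_k); terms with p_k = 0 contribute 0
    mask = p > 0
    return np.sum(p[mask] * np.log(p[mask] / np.clip(q[mask], eps, 1.0)))

y = np.array([1.0, 2.0, 3.0])
y_hat = np.array([1.5, 2.0, 2.0])
print(mse(y, y_hat))           # (0.25 + 0 + 1) / 3 ≈ 0.4167

p = np.array([0.0, 1.0, 0.0])  # one-hot target: the true class is index 1
q = np.array([0.2, 0.7, 0.1])  # predicted class probabilities
print(cross_entropy(p, q))     # -log(0.7) ≈ 0.3567
print(kl_divergence(p, q))     # same value, since H(p) = 0 for a one-hot p
```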

Matrix Operations and Decompositions: large-scale matrix operations with techniques like Singular Value Decomposition (SVD) and eigenvalue computations.
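
As a small illustration of these decompositions, the NumPy sketch below computes a thin SVD of a random matrix, forms its best rank-k approximation, and checks that the eigenvalues of the Gram matrix A^T A are the squared singular values. The matrix size and k = 5 are arbitrary choices for demonstration.

```python
import numpy as np

rng = np.random.default_rng(1)
A = rng.standard_normal((100, 20))  # toy data matrix

# Thin SVD: A = U @ diag(s) @ Vt, with singular values s in decreasing order
U, s, Vt = np.linalg.svd(A, full_matrices=False)
assert np.allclose(A, U @ np.diag(s) @ Vt)

# Best rank-k approximation in Frobenius norm (Eckart-Young): keep the top k triplets
k = 5
A_k = U[:, :k] @ np.diag(s[:k]) @ Vt[:k, :]
err = np.linalg.norm(A - A_k, "fro")
print(err, np.sqrt(np.sum(s[k:] ** 2)))  # the two numbers should agree

# Eigendecomposition of the symmetric matrix A^T A; eigh returns ascending eigenvalues
evals, evecs = np.linalg.eigh(A.T @ A)
print(np.allclose(evals[::-1], s ** 2))  # eigenvalues equal squared singular values
```

np.linalg.eigh is used rather than np.linalg.eig because A^T A is symmetric, which guarantees real eigenvalues and orthonormal eigenvectors.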

Optimization Algorithms: Stochastic Gradient Descent (SGD) and Adam (Adaptive Moment Estimation), which iteratively update millions (or billions) of parameters in LLMs and other deep learning models.
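
The sketch below contrasts plain mini-batch SGD with Adam on a toy least-squares problem, so both can be checked against known coefficients. The data, learning rate, batch size, and number of epochs are illustrative assumptions; the Adam update follows the standard form with default b1 = 0.9, b2 = 0.999, eps = 1e-8.

```python
import numpy as np

rng = np.random.default_rng(2)
n, p = 1000, 5
X = rng.standard_normal((n, p))
beta_true = np.arange(1, p + 1, dtype=float)  # hypothetical true coefficients
y = X @ beta_true + 0.1 * rng.standard_normal(n)

def minibatch_grad(beta, idx):
    # gradient of the mini-batch mean squared error (1/|B|) * ||y_B - X_B beta||^2
    Xb, yb = X[idx], y[idx]
    return -2.0 * Xb.T @ (yb - Xb @ beta) / len(idx)

def sgd(lr=0.05, epochs=30, batch=32):
    beta = np.zeros(p)
    for _ in range(epochs):
        perm = rng.permutation(n)  # reshuffle the data each epoch
        for start in range(0, n, batch):
            idx = perm[start:start + batch]
            beta -= lr * minibatch_grad(beta, idx)
    return beta

def adam(lr=0.05, epochs=30, batch=32, b1=0.9, b2=0.999, eps=1e-8):
    beta = np.zeros(p)
    m = np.zeros(p)  # running mean of gradients (first moment)
    v = np.zeros(p)  # running mean of squared gradients (second moment)
    t = 0
    for _ in range(epochs):
        perm = rng.permutation(n)
        for start in range(0, n, batch):
            idx = perm[start:start + batch]
            g = minibatch_grad(beta, idx)
            t += 1
            m = b1 * m + (1 - b1) * g
            v = b2 * v + (1 - b2) * g ** 2
            m_hat = m / (1 - b1 ** t)  # bias correction for zero initialization
            v_hat = v / (1 - b2 ** t)
            beta -= lr * m_hat / (np.sqrt(v_hat) + eps)  # per-coordinate step size
    return beta

beta_sgd = sgd()
beta_adam = adam()
print(np.round(beta_sgd, 2))   # both should be close to [1, 2, 3, 4, 5]
print(np.round(beta_adam, 2))
```

The key design difference: SGD uses one global step size, while Adam rescales each coordinate by a running estimate of its gradient magnitude, which makes it less sensitive to badly scaled parameters.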

Regularization Techniques: L1/L2 penalties or dropout, which prevent overfitting and enhance generalization.
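
Two of these techniques can be illustrated in a few lines of NumPy: an L2 (ridge) penalty, whose closed-form solution shows the coefficients shrinking as the penalty grows, and inverted dropout as commonly implemented in deep learning libraries. The toy data and penalty values are assumptions for illustration; the L1 penalty (lasso) has no closed form and is not shown.

```python
import numpy as np

rng = np.random.default_rng(3)
n, p = 50, 10
X = rng.standard_normal((n, p))
y = X[:, 0] + 0.5 * rng.standard_normal(n)  # toy data: only the first feature matters

def ridge(X, y, lam):
    # L2-penalized least squares: argmin_b ||y - X b||^2 + lam * ||b||^2
    # closed form b = (X^T X + lam I)^{-1} X^T y, solved without forming the inverse
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)

for lam in [0.0, 1.0, 10.0, 100.0]:
    b = ridge(X, y, lam)
    print(lam, np.round(np.linalg.norm(b), 3))  # coefficient norm shrinks as lam grows

def dropout(h, rate, rng, training=True):
    # inverted dropout: zero each unit with probability `rate` and rescale the
    # survivors by 1 / (1 - rate) so the expected activation is unchanged;
    # at test time the layer is the identity
    if not training or rate == 0.0:
        return h
    mask = rng.random(h.shape) >= rate
    return h * mask / (1.0 - rate)

h = np.ones((1000, 100))
h_drop = dropout(h, 0.5, rng)
print(h_drop.mean())  # close to 1.0, the mean of the undropped activations
```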

Installation

Environment management

What are environments: a Python environment is a self-contained directory that contains a specific Python version and various packages.

Why it's crucial: different projects may require different versions of Python or libraries, and environments prevent conflicts between these requirements.

conda for environment management

Download miniforge here, https://github.com/conda-forge/miniforge

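Open your terminal/command prompt and run the following commands to create, activate, populate, deactivate, and remove an environment (in the last command, replace myenv with the name of the environment you want to delete):

```shell
# create an environment named stat_207 with Python 3.10
conda create --name stat_207 python=3.10
# activate it
conda activate stat_207
# install NumPy into the active environment
conda install numpy
# leave the environment
conda deactivate
# delete an environment (myenv is a placeholder name)
conda env remove --name myenv
```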
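
To confirm that the activated stat_207 environment is the one actually in use, a short Python check like the one below can be run after activating the environment and installing NumPy. Saving it as check_env.py and running it with python check_env.py is a hypothetical workflow; any Python prompt inside the environment works the same way.

```python
import sys

import numpy as np

# Run inside the activated stat_207 environment.
print(sys.version)     # should report Python 3.10.x for stat_207
print(sys.prefix)      # should point inside the stat_207 environment directory
print(np.__version__)  # the NumPy version installed into the environment
```

If sys.prefix points to a different Python installation, the environment is not active in that terminal.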

Visual Studio Code (VS Code)

A lightweight, cross-platform code editor with an extensive extension system.

Get started with VS Code: https://code.visualstudio.com/docs/setup/setup-overview

(Optional)

- Google Colab: https://colab.research.google.com/

For deep learning and training deep neural networks,

Resources: